Real-Time Vision

Machine Vision in Real Time: How Does It Work?

Real-time machine vision may seem like a complex topic, but it can be broken down into individual steps. Looked at one step at a time, it becomes quite straightforward.

In general, machine vision means the processing of optical signals by machines. Its fields of application are as numerous as they are diverse: from production and quality assurance to vehicle engineering and medicine, virtually every technological area can benefit from “automatic seeing” to some degree. And since automation is a key element in every industry, it is no surprise that these fields increasingly rely on image capture and processing. In an industrial context, the term “machine vision” is used specifically to set it apart from the broader field of “computer vision”, because industrial applications come with stricter requirements. One such requirement for machine-based processes is often real-time capability. But how does machine vision work in real time?

Real-Time Machine Vision: What Is the Procedure?

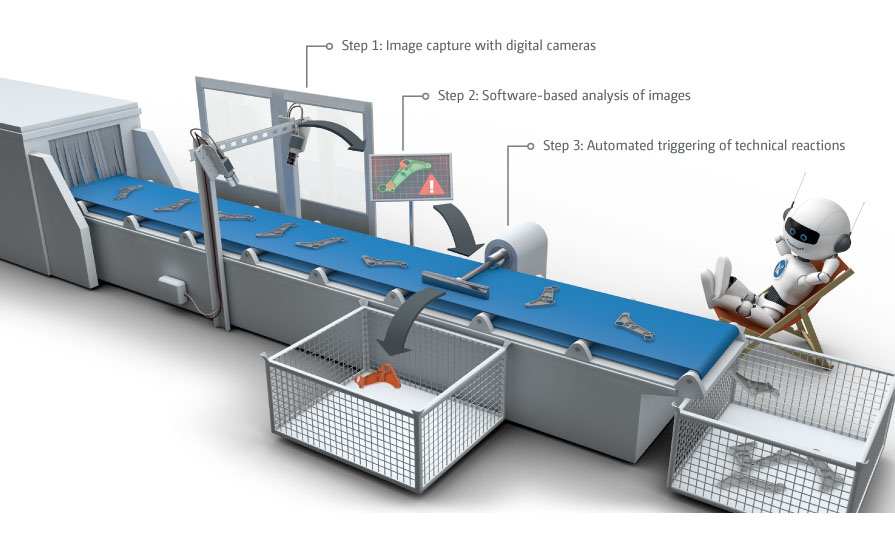

Broken down step by step, a machine vision operation consists of three stages: capture, processing and reaction. A camera captures images and passes them on in digitized form. Specialized software analyzes and evaluates these images according to the relevant criteria. The results can then automatically trigger technical reactions, for example by a machine.

What is shown here as a simplified three-part model is in fact a continuous cyclical process that repeats hundreds or thousands of times per second. This is where a real-time operating system comes in: it can ensure that no single cycle exceeds a specified total duration. This “hard” real time, as provided by Kithara RealTime Suite, is what makes many applications possible in the first place. It is achieved by preventing any interruptions, both in the transitions between the individual steps and during the image processing computations. On a conventional operating system, such interruptions could not be prevented.
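
The cycle described above can be pictured as a fixed-period loop in which each pass of capture, processing and reaction must fit into a time budget; at 1,000 cycles per second, that budget is 1 ms. The following Python sketch is illustrative only: `capture`, `process` and `react` are hypothetical stand-ins, and the loop merely measures overruns after the fact. On a conventional operating system it cannot prevent them, which is exactly the guarantee a hard real-time system adds.

```python
import time
from dataclasses import dataclass, field


@dataclass
class CycleStats:
    """Collects per-cycle work durations and counts budget overruns."""
    budget_s: float
    durations: list = field(default_factory=list)
    overruns: int = 0

    def record(self, duration_s: float) -> None:
        self.durations.append(duration_s)
        if duration_s > self.budget_s:
            self.overruns += 1


def capture(i):
    # Hypothetical stand-in for acquiring frame i: an 8x8 grayscale image.
    return [[(i + x + y) % 256 for x in range(8)] for y in range(8)]


def process(frame):
    # Hypothetical stand-in for image analysis: mean brightness.
    pixels = [p for row in frame for p in row]
    return sum(pixels) / len(pixels)


def react(result):
    # Hypothetical stand-in for a control reaction, e.g. a reject signal.
    return result > 127


def run_cycles(cycles_per_second: float, n_cycles: int) -> CycleStats:
    budget = 1.0 / cycles_per_second  # e.g. 1000 Hz -> 1 ms per cycle
    stats = CycleStats(budget_s=budget)
    next_start = time.perf_counter()
    for i in range(n_cycles):
        start = time.perf_counter()
        react(process(capture(i)))
        # Duration of capture -> process -> react only (sleep excluded).
        stats.record(time.perf_counter() - start)
        # Wait for the next period; a general-purpose OS may oversleep here.
        next_start += budget
        delay = next_start - time.perf_counter()
        if delay > 0:
            time.sleep(delay)
    return stats


if __name__ == "__main__":
    stats = run_cycles(cycles_per_second=1000, n_cycles=200)
    print(f"budget {stats.budget_s * 1e3:.3f} ms, "
          f"worst {max(stats.durations) * 1e3:.3f} ms, "
          f"overruns {stats.overruns}")
```

Running this repeatedly on a desktop system will typically show varying worst-case times; the point of a hard real-time system is that this worst case stays bounded.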

Image Capture and Real Time

Vision applications in industrial and research settings place higher demands on the hardware used than everyday applications do. Compared with consumer cameras, for instance, industrial cameras need to be significantly more robust and more resistant to external factors such as temperature, humidity, magnetic fields and vibration. Since nearly all functions of an industrial camera are controlled from a connected computer, these cameras can be very compact, which makes them easier to install in machines.

Images are captured either via so-called frame grabbers, which are specialized expansion cards to which the camera is connected, or directly via digital interfaces such as Ethernet and USB. The latter two offer high flexibility and make cameras from different manufacturers interchangeable, thanks to dedicated vision standards such as GigE Vision and USB3 Vision. In addition, GenICam, the “Generic Interface for Cameras”, standardizes camera programming even further, regardless of the interface technology.
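
The idea behind such standards can be illustrated with a small model: application code addresses camera features by name, and a description maps each name to a register on the device, regardless of whether that register is reached over Ethernet or USB. (In GenICam, the camera supplies this description as an XML file.) The Python sketch below is a hypothetical simplification, not the GenICam API: the transports are simulated in memory and the register addresses are made up. The feature names `Width`, `Height` and `ExposureTime` are modeled on GenICam's Standard Features Naming Convention (SFNC).

```python
from abc import ABC, abstractmethod


class Transport(ABC):
    """Interface-specific layer: reads and writes raw device registers."""

    @abstractmethod
    def read_register(self, address: int) -> int: ...

    @abstractmethod
    def write_register(self, address: int, value: int) -> None: ...


class SimulatedGigETransport(Transport):
    # Stand-in for a GigE Vision link; a real one would exchange network packets.
    def __init__(self):
        self.registers = {}

    def read_register(self, address):
        return self.registers.get(address, 0)

    def write_register(self, address, value):
        self.registers[address] = value


class SimulatedUsb3Transport(Transport):
    # Stand-in for a USB3 Vision link; stores registers differently on purpose.
    def __init__(self, size=0x1000):
        self.memory = [0] * size

    def read_register(self, address):
        return self.memory[address]

    def write_register(self, address, value):
        self.memory[address] = value


# Hypothetical feature-to-address map (the role GenICam's XML description plays).
# Exposure is simplified to an integer number of microseconds.
FEATURE_MAP = {"Width": 0x100, "Height": 0x104, "ExposureTime": 0x200}


class Camera:
    """Application-facing object: features are addressed by name only."""

    def __init__(self, transport: Transport, feature_map=FEATURE_MAP):
        self._transport = transport
        self._features = feature_map

    def set(self, feature: str, value: int) -> None:
        self._transport.write_register(self._features[feature], value)

    def get(self, feature: str) -> int:
        return self._transport.read_register(self._features[feature])


def configure(camera: Camera, width: int, height: int, exposure_us: int):
    # The same application code runs unchanged on either interface.
    camera.set("Width", width)
    camera.set("Height", height)
    camera.set("ExposureTime", exposure_us)
    return camera.get("Width"), camera.get("Height"), camera.get("ExposureTime")


if __name__ == "__main__":
    for transport in (SimulatedGigETransport(), SimulatedUsb3Transport()):
        print(type(transport).__name__, configure(Camera(transport), 1920, 1200, 500))
```

Swapping the transport changes nothing in `configure`, which mirrors the interchangeability the vision standards aim for.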

Kithara's real-time operating system uses specially developed Ethernet and USB drivers to control GigE Vision and USB3 Vision cameras, respectively, in real time. This allows image data to be acquired from the camera and transferred without delay. Because image data can be very large, correspondingly large buffers are used to prevent data packets from being lost and to ensure seamless data transfer.
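
How large “very large” is can be estimated with a little arithmetic. The Python sketch below computes the frame size, data rate and packets per frame for an assumed camera, then sizes a frame buffer so that frames arriving while the consumer is briefly stalled are not lost. All parameter values (1920 × 1200 pixels, Mono8 at 1 byte per pixel, 40 frames per second, 8,000-byte packet payloads, a 50 ms worst-case stall) are illustrative assumptions, not Kithara defaults, and protocol header overhead is ignored.

```python
from collections import deque


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def stream_budget(width, height, bytes_per_pixel, fps, packet_payload):
    """Frame size, data rate and packets per frame (protocol headers ignored)."""
    frame_bytes = width * height * bytes_per_pixel
    bytes_per_second = frame_bytes * fps
    packets_per_frame = ceil_div(frame_bytes, packet_payload)
    return frame_bytes, bytes_per_second, packets_per_frame


def ring_slots(fps, worst_stall_ms):
    """Frame buffers needed to bridge a consumer stall without losing frames:
    the frames that arrive during the stall, plus one for the next arrival."""
    return ceil_div(fps * worst_stall_ms, 1000) + 1


class FrameRing:
    """Fixed-capacity frame queue that counts the frames it had to drop."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.frames = deque()
        self.dropped = 0

    def push(self, frame):
        if len(self.frames) >= self.capacity:
            self.dropped += 1  # no free buffer: this frame is lost
            return False
        self.frames.append(frame)
        return True

    def pop(self):
        return self.frames.popleft() if self.frames else None


def frames_lost(capacity, fps, worst_stall_ms, total_frames=20):
    """One frame arrives per tick; the consumer is stalled for the first ticks,
    then takes one frame per tick."""
    ring = FrameRing(capacity)
    stall_ticks = ceil_div(fps * worst_stall_ms, 1000)
    for tick in range(total_frames):
        ring.push(tick)
        if tick >= stall_ticks:
            ring.pop()
    return ring.dropped


if __name__ == "__main__":
    frame, rate, packets = stream_budget(1920, 1200, 1, 40, 8000)
    print(frame, rate * 8 / 1e6, packets)  # bytes, Mbit/s, packets per frame
    slots = ring_slots(40, 50)
    print(slots, frames_lost(slots, 40, 50), frames_lost(slots - 1, 40, 50))
```

For the assumed camera, each frame is 2,304,000 bytes, the stream amounts to 92,160,000 bytes per second (about 737 Mbit/s, below Gigabit Ethernet's nominal 1,000 Mbit/s before protocol overhead), and each frame is split into 288 packets. Three buffer slots bridge the assumed stall without loss; with one slot fewer, a frame is dropped.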

Image Processing Software and Control Reaction

As soon as the image data has been captured and transferred to the computer, it is available for further evaluation. Depending on the application, custom programs are used for this purpose, most of which are built from algorithms provided by image processing libraries. The many functions of these libraries include, for example, the recognition of objects, text, 2D codes, faces, gestures and motion. This enables images to be analyzed automatically and decisions to be made, for example about control reactions.
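
The kind of analysis and decision described above can be illustrated with two classic operations, thresholding and connected-component labeling, followed by a simple pass/reject rule. The Python sketch below is a deliberately tiny, library-free stand-in for the optimized operators that image processing libraries provide (OpenCV, for example, offers `cv2.threshold` and `cv2.connectedComponentsWithStats`). The threshold level, minimum blob area, expected part count and sample image are assumptions chosen for the example.

```python
from collections import deque


def threshold(image, level):
    """Binarize a grayscale image given as a list of rows."""
    return [[1 if p >= level else 0 for p in row] for row in image]


def find_blobs(binary, min_area=1):
    """Label 4-connected foreground regions; return (area, (cx, cy)) per blob."""
    h, w = len(binary), len(binary[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for y in range(h):
        for x in range(w):
            if not binary[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill from this unvisited foreground pixel.
            queue = deque([(x, y)])
            seen[y][x] = True
            pixels = []
            while queue:
                px, py = queue.popleft()
                pixels.append((px, py))
                for nx, ny in ((px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)):
                    if 0 <= nx < w and 0 <= ny < h and binary[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(pixels) >= min_area:  # smaller regions are treated as noise
                cx = sum(p[0] for p in pixels) / len(pixels)
                cy = sum(p[1] for p in pixels) / len(pixels)
                blobs.append((len(pixels), (cx, cy)))
    return blobs


def inspect(image, expected_parts, level=128, min_area=3):
    """Decision for a control reaction: 'pass' if exactly the expected number of parts is found."""
    blobs = find_blobs(threshold(image, level), min_area)
    return ("pass" if len(blobs) == expected_parts else "reject"), blobs


# Hypothetical 6x6 test image: two parts plus a single bright noise pixel.
SAMPLE = [
    [0,   0,   0,   0,   0,   0],
    [0, 200, 210,   0,   0,   0],
    [0, 220, 230,   0, 190,   0],
    [0,   0,   0,   0, 180,   0],
    [0,   0,   0,   0, 170,   0],
    [0,   0,   0,   0,   0, 255],
]

if __name__ == "__main__":
    print(inspect(SAMPLE, expected_parts=2))
```

The returned centroids are what a subsequent control reaction, such as a reject gate or a gripper, would act on.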

Since the image processing algorithms involved are highly complex, it is hardly surprising that popular libraries contain thousands of individual operators. Examples include the commercial library Halcon by MVTec and the open-source library OpenCV. There is also a discernible trend towards using machine learning algorithms to implement even more complex vision applications.

Real-Time Automation Beyond the Camera

To execute machine vision processes in a real-time context, these libraries are loaded into a real-time system. This guarantees that the time from image capture through image processing to the machine reaction stays within a short, predetermined limit. With Kithara RealTime Suite, even control reactions, such as a robotic arm moving to and grasping an object that has just been recognized, can be handled in the same real-time context. This enables developers to combine machine vision and machine logic within their application code and to tune the two to each other. Machine vision applications can also be integrated into higher-level automation solutions. The result is a uniform real-time system in which received image data is analyzed, translated into complex sequences and thus used to directly control sophisticated machine procedures.
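
Combining vision and machine logic can be as direct as turning a detected object's pixel position into a robot target. The Python sketch below assumes a hypothetical affine camera-to-robot calibration and a hypothetical `PickCommand` type; it is not Kithara's API. A simple freshness check illustrates why timing matters: a result that arrives after its deadline is discarded rather than acted on, because the object has likely moved on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Affine2D:
    """Pixel -> robot mapping in mm: X = a*u + b*v + tx, Y = c*u + d*v + ty."""
    a: float
    b: float
    tx: float
    c: float
    d: float
    ty: float

    def apply(self, u: float, v: float):
        return (self.a * u + self.b * v + self.tx,
                self.c * u + self.d * v + self.ty)


@dataclass(frozen=True)
class PickCommand:
    # Hypothetical robot target in millimetres.
    x_mm: float
    y_mm: float


def plan_reaction(centroid_px, capture_time_s, now_s, calib, deadline_s):
    """Turn a detected object into a robot command, or None if it is too late."""
    if now_s - capture_time_s > deadline_s:
        return None  # result is stale: acting on it could miss the part
    x, y = calib.apply(*centroid_px)
    return PickCommand(round(x, 3), round(y, 3))


if __name__ == "__main__":
    # Assumed calibration: 0.5 mm per pixel, no rotation, offset (100, -50) mm.
    calib = Affine2D(0.5, 0.0, 100.0, 0.0, 0.5, -50.0)
    print(plan_reaction((40, 20), 0.0, 0.0015, calib, deadline_s=0.002))
    print(plan_reaction((40, 20), 0.0, 0.003, calib, deadline_s=0.002))
```

With this assumed calibration, an object at pixel (40, 20) maps to (120, -40) mm. In a hard real-time system the deadline would be guaranteed by design rather than merely checked afterwards.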

GigE Vision® is a registered trademark of the Automated Imaging Association.

USB3 Vision® is a registered trademark of the Automated Imaging Association.